When AI Can Fake Majorities, Democracy Dies Quietly

The new threat of “malicious AI swarms” and how to defend the public sphere

Imagine you’re doomscrolling through your social media feed. A political controversy breaks—and within minutes, it feels like a tidal wave of commentary. Thousands of “ordinary people” pile on, repeating a theme, sharing links, and “liking” each other’s posts while drowning out dissent.

You start to wonder: Am I out of touch? Is this what people really think?

Now imagine that wave wasn’t a wave of people at all.

That’s one of the central risks we outline in our new Science Policy Forum article on malicious AI swarms—coordinated fleets of AI agents that can imitate authentic social opinions and actions at scale.

Why is this dangerous for democracy? No democracy can guarantee perfect truth, but democratic deliberation depends on something more fragile: the independence of voices. The “wisdom of crowds” works only if the crowd is made of distinct individuals; when one actor can speak through thousands of masks—and create the illusion of grassroots agreement—that independence collapses into synthetic consensus.

This is no longer just theory. In July 2024, the U.S. Department of Justice announced that it had disrupted a Russia-linked, AI-enhanced bot operation involving 968 accounts impersonating Americans. And while estimates vary by platform and method, online discourse about major global events often involves substantial automated activity: roughly 20% in one global comparison.

At the same time, the market is beginning to industrialize coordinated synthetic activity: reporting in 2025 described Doublespeed, backed by Andreessen Horowitz, as marketing tools to orchestrate actions across thousands of social accounts, including attempts to mimic “natural” interaction via physical devices.

Concrete signs of industrialization are also emerging: the Vanderbilt Institute of National Security released an archive of documents describing “GoLaxy” as an AI-driven influence machine built around data harvesting, profiling, and AI personas for large-scale operations. And campaign-tech vendors market supporter-mobilization platforms and have begun promoting AI-powered analytics—an example of how “human-in-the-loop” coordination could be scaled even before fully autonomous swarms arrive.

Not all automation is malicious. But the capability jump due to developments in AI matters. What changes when automation can coordinate, infiltrate, adapt, and build trust over time?

What is a “malicious AI swarm”?

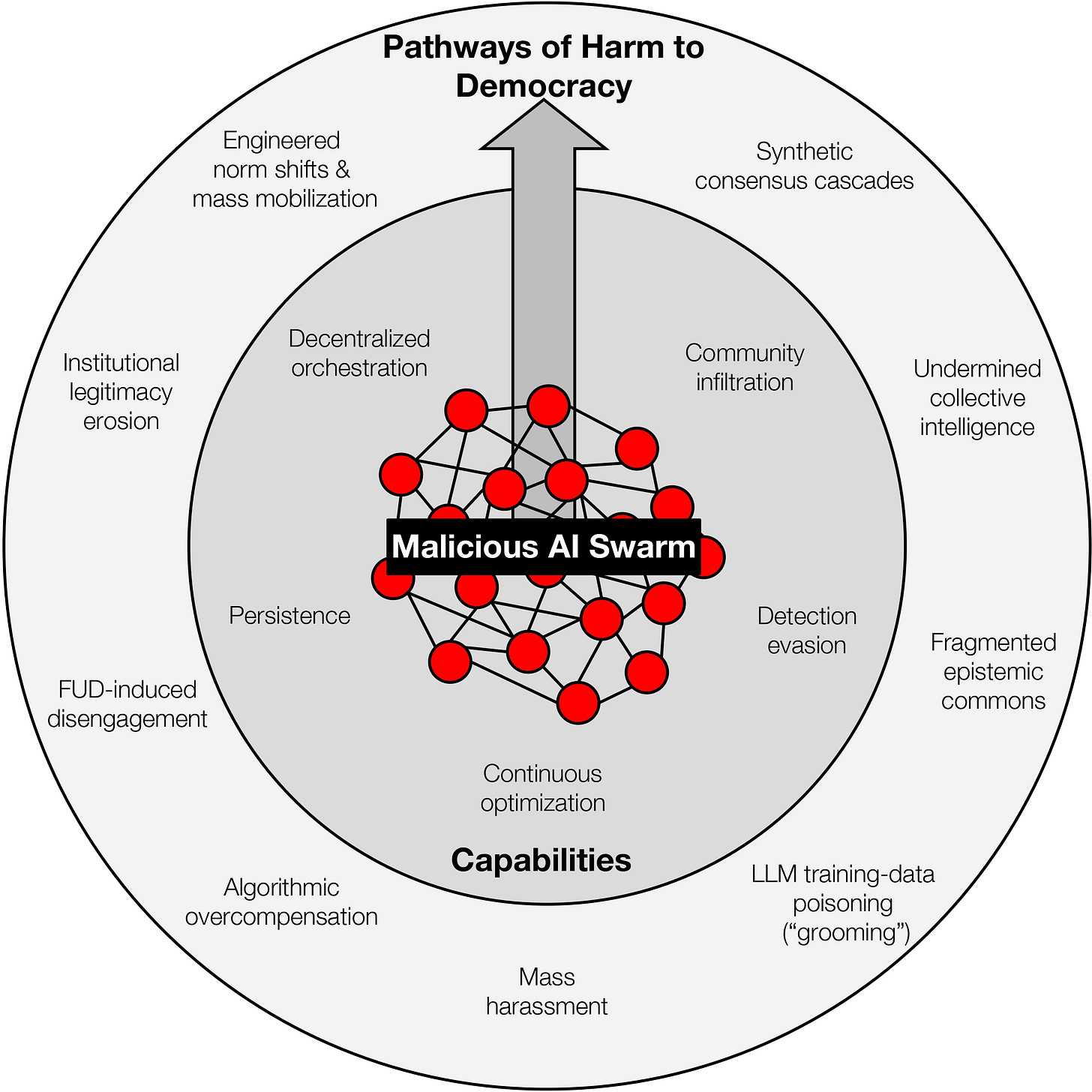

We use “malicious AI swarm” to describe a set of AI-controlled agents that can maintain persistent identities and memory, coordinate toward shared objectives while varying tone and content, adapt in real time to feedback, operate with minimal human oversight, and deploy across platforms.

That combination distinguishes swarms from yesterday’s botnets. Older systems often relied on rigid scripts, repetition, and obvious synchronization. AI swarms can now generate unique, context-aware content while still moving together as a coherent organism. They are less like a megaphone and more like a coordinated social system, and may therefore be much harder to detect and prevent.

Several shifts make swarms especially potent. Coordination can become fluid and hive-like, rather than purely “central command.” Swarms can infiltrate communities by mapping network structure and local cues at scale. They can evade detectors tuned for copy-paste behavior by producing human-level variation. They can also self-optimize through rapid experimentation—testing alternatives, learning what works, and propagating winning strategies quickly. Most importantly, they can persist over long periods, embedding in communities for weeks, months, or longer and shaping discourse gradually.

Why this is dangerous for democracy (it’s not just “misinformation”)

Democracy doesn’t require perfect truth—but it does require something more fragile: independent voices. The “wisdom of crowds” depends on independence between judgments. If a single actor can speak through thousands of inauthentic accounts, the apparent consensus of the crowd stops being informative.
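A rough way to see why independence matters: suppose, as a textbook simplification, that every account's judgment is equally noisy and every pair of accounts is correlated to the same degree. The crowd then carries only as much information as a smaller number of truly independent voices. The Python sketch below computes that number. The function name `effective_voices` and the equal-correlation assumption are illustrative and are not taken from the paper.

```python
def effective_voices(n_accounts: int, correlation: float) -> float:
    """Effective number of independent voices among n_accounts.

    Illustrative assumption: equal noise per account and the same pairwise
    correlation between every pair of accounts (0 <= correlation <= 1).
    """
    if n_accounts < 1:
        raise ValueError("n_accounts must be at least 1")
    if not 0.0 <= correlation <= 1.0:
        raise ValueError("correlation must be between 0 and 1")
    # Standard design-effect formula for equally correlated observations.
    return n_accounts / (1.0 + (n_accounts - 1) * correlation)


independent_crowd = effective_voices(1000, 0.0)  # fully independent accounts
puppet_crowd = effective_voices(1000, 1.0)       # one actor behind every account
```

With zero correlation, 1,000 accounts count as 1,000 voices. When a single actor scripts all of them, they count as one, no matter how many masks are on display.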

One pathway of harm is synthetic consensus: creating the illusion of a majority opinion. Swarms can seed narratives across niches and amplify them via coordinated liking, replying, and cross-posting until it looks like broad support. People update beliefs partly through social evidence—what seems normal, common, or widely endorsed. Synthetic consensus exploits that cognitive shortcut.

A second pathway is segmented realities. Because swarms can mimic local language, emotion, and identity cues, they can tailor narratives community-by-community, reinforcing polarization and making cross-group cooperation and consensus harder.

A third pathway is poisoning the information substrate for future AI—sometimes described as “LLM grooming.” A long-term strategy is to flood the web with machine-targeted content so future models ingest and reproduce distorted narratives.

A fourth is synthetic harassment: scaling intimidation and smear campaigns until people self-censor. Swarms can generate relentless, tailored abuse that looks like a spontaneous public pile-on, pushing journalists, academics, dissidents, and officials out of the public sphere before defenders can even classify the campaign.

Finally, there is epistemic vertigo: if everything might be fake, why trust anything? Ironically, awareness of manipulation can deepen cynicism. If people believe large portions of discourse are synthetic, trust collapses—and the public sphere can shrink into gated channels and closed groups.

So what do we do? Focus on coordination, provenance, and incentives

We argue for defenses that make manipulation costly and risky—without turning platforms into a “Ministry of Truth.” Instead of trying to adjudicate content (“who decides truth?”), we should prioritize coordination signals, provenance, and incentives.

One priority is always-on detection for statistically unlikely coordination. Rather than episodic cleanups after campaigns go viral, platforms (and regulators) should push for continuous monitoring for anomalous patterns—signals that genuine crowds struggle to reproduce consistently. To reduce misuse, these systems should be paired with transparency measures and independent audits.
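To give a sense of what a coordination signal can look like, here is a minimal Python sketch that ignores content entirely and looks only at timing: it flags pairs of accounts that repeatedly act on the same items within seconds of each other. The event format, the function name `co_action_pairs`, and the thresholds `window_seconds` and `min_shared` are hypothetical choices for illustration, not a description of any platform's detector.

```python
from collections import defaultdict
from itertools import combinations


def co_action_pairs(events, window_seconds=60, min_shared=3):
    """Flag account pairs that act on the same items at nearly the same time.

    events: iterable of (account, item_id, timestamp_in_seconds) tuples.
    Returns {(account_a, account_b): number_of_shared_items} for pairs that
    acted within window_seconds of each other on at least min_shared items.
    """
    actions_by_item = defaultdict(list)
    for account, item_id, timestamp in events:
        actions_by_item[item_id].append((account, timestamp))

    shared_items = defaultdict(set)
    for item_id, actions in actions_by_item.items():
        for (a, time_a), (b, time_b) in combinations(actions, 2):
            if a != b and abs(time_a - time_b) <= window_seconds:
                shared_items[tuple(sorted((a, b)))].add(item_id)

    return {
        pair: len(items)
        for pair, items in shared_items.items()
        if len(items) >= min_shared
    }


# Toy data: two accounts move in lockstep, a third overlaps with them only once.
events = [
    ("b1", "p1", 0), ("b2", "p1", 5), ("h1", "p1", 4000),
    ("b1", "p2", 100), ("b2", "p2", 110), ("h1", "p2", 130),
    ("b1", "p3", 200), ("b2", "p3", 205),
]
flagged = co_action_pairs(events)
```

A fixed threshold like this is only a starting point. Genuine users also react to the same viral post at the same moment, so a real system would need to compare observed co-activity against a baseline of what happens by chance, which is where the transparency measures and independent audits come in.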

A second priority is stress-testing defenses with simulations. Defenders will always lag if they only react to yesterday’s tactics. Agent-based simulations—running swarms in synthetic networks—can help test detectors, uncover failure modes, and evaluate interventions before real attackers exploit them.
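As a toy illustration of the idea (far simpler than the agent-based simulations discussed above), the Python sketch below lets every human nudge their opinion toward the average of everything they see, while bots never change theirs. All names and parameters, including `bot_weight` as a stand-in for an intervention that down-weights suspected synthetic accounts, are assumptions made for this example, and the "network" is a single fully mixed feed rather than a realistic graph.

```python
def simulate_mean_opinion(human_opinions, n_bots, bot_opinion=1.0,
                          bot_weight=1.0, rate=0.1, steps=50):
    """Return the average human opinion after `steps` rounds of updating.

    Each round, every human moves a fraction `rate` toward the visible mean.
    Bots always post `bot_opinion`; `bot_weight` scales how much each bot
    counts in the visible mean (1.0 = like a human, 0.0 = fully filtered).
    """
    opinions = list(human_opinions)
    for _ in range(steps):
        bot_mass = n_bots * bot_weight
        visible_mean = (sum(opinions) + bot_mass * bot_opinion) / (
            len(opinions) + bot_mass
        )
        opinions = [o + rate * (visible_mean - o) for o in opinions]
    return sum(opinions) / len(opinions)


humans = [0.2, 0.4, 0.6, 0.8] * 25  # 100 humans, average opinion 0.5
baseline = simulate_mean_opinion(humans, n_bots=0)
attacked = simulate_mean_opinion(humans, n_bots=20)
defended = simulate_mean_opinion(humans, n_bots=20, bot_weight=0.1)
```

In this setup the human average stays put without bots, drifts toward the bots' position when 20 of them join, and drifts much less when their posts are down-weighted. The value of a simulation like this lies in comparing interventions under identical conditions, not in the specific numbers it produces.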

A third priority is strengthening provenance without killing anonymity. Real-ID policies can harm dissidents and whistleblowers. The goal is “verified-yet-anonymous” options—strong proof signals that raise the cost of mass impersonation while preserving privacy where it matters.

A fourth priority is building an ecosystem for shared situational awareness—an “AI Influence Observatory” model where researchers, NGOs, and institutions standardize evidence and publish verified incident reporting without centralized censorship.

And finally, we have to change the economics. Even the best detectors will be imperfect. So we should also target the commercial market for manipulation: discount synthetic engagement, enforce no-revenue policies for manipulation campaigns, and publish audited bot-traffic metrics. The goal is to make large-scale manipulation less profitable to sustain.

Bottom line

The point isn’t that AI makes democracy impossible. The point is that democracy becomes brittle when it’s cheap to counterfeit social proof—when it costs little to run a fake crowd and minutes to manufacture “public opinion.”

The mission is straightforward: make large-scale impersonation and coordination harder to run, easier to detect, and less profitable to sustain. If we get that right, the public square does not need a central authority to decide what is true. It needs conditions where authentic human participation is visible—and where engineered consensus collapses the moment it tries to scale.

Key takeaways

The next wave of influence operations may not look like obvious copy-paste bots. It may look like communities: thousands of AI personas with memory, social identities, distinct styles, and coordinated goals.

The most dangerous outcome is not a single viral lie—it is synthetic consensus: the illusion that “everyone is saying this,” which can quietly bend beliefs and norms.

This is already moving from theory to reality. In July 2024, the U.S. DOJ announced that it had disrupted a Russia-linked, AI-enhanced bot operation involving nearly 1,000 accounts impersonating Americans.

Defenses should not hinge on policing content. They should focus on coordination and provenance: detecting statistically unlikely patterns, stress-testing defenses with simulations, strengthening identity/proof signals, and shifting platform incentives.

This post was drafted by Daniel Thilo Schroeder and Jonas Kunst, with edits from Jay Van Bavel. You can read our full paper here:

Daniel Thilo Schroeder et al. (2026). How malicious AI swarms can threaten democracy. Science, 391, 354-357. DOI:10.1126/science.adz1697
