Can AI Help Us Escape Our Echo Chambers?

Issue 183: Our latest research provides evidence that people see AI as a neutral source of political information and trust it over in-group or out-group members

We have access to more knowledge than ever before. Yet many of us get sucked into “echo chambers” that reinforce our existing beliefs. A key reason is that we often choose information sources based on social identity rather than accuracy. In the United States, Democrats prefer MSNBC or the New York Times, while Republicans favor Fox News or the Wall Street Journal.

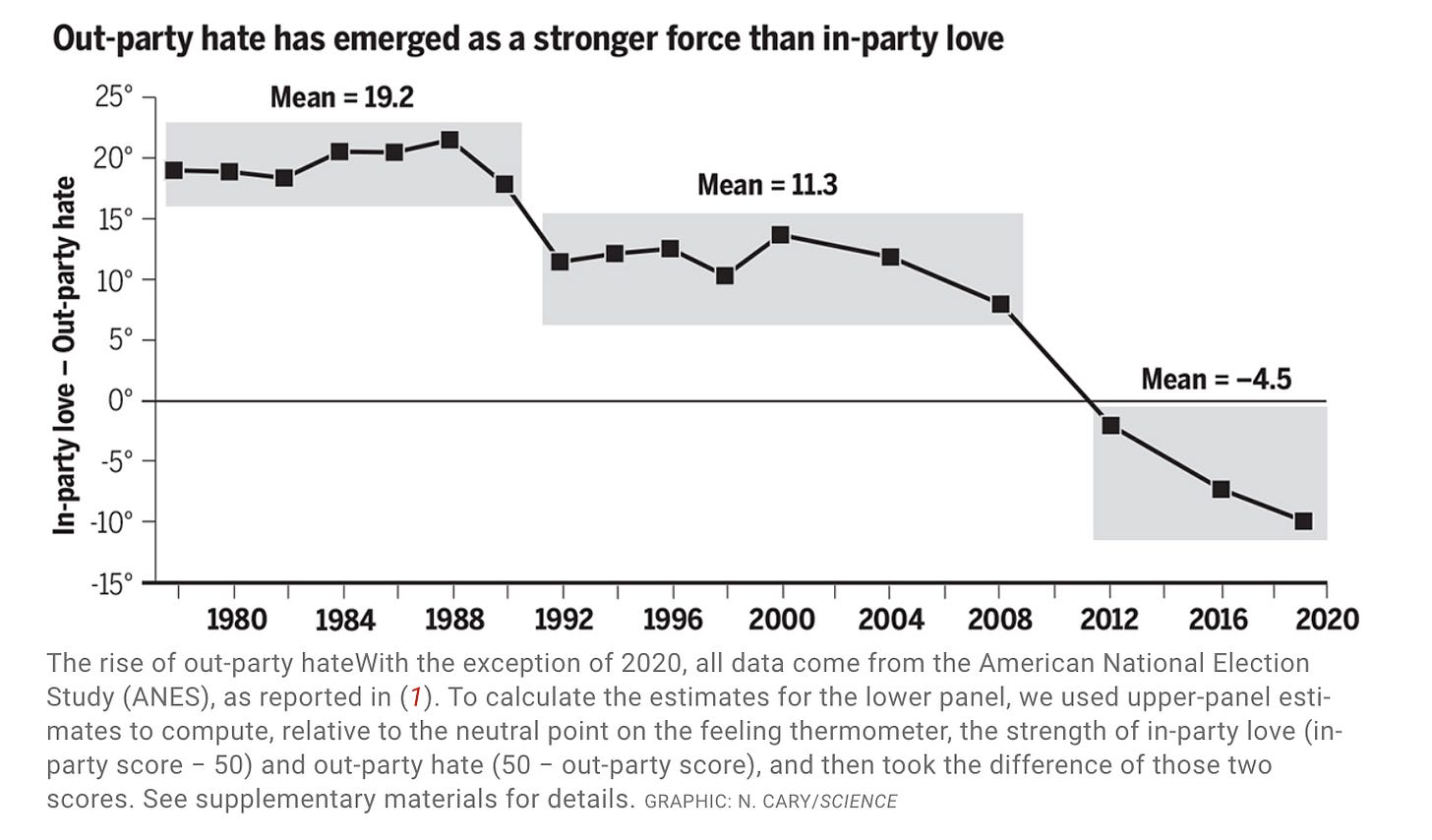

This tendency to avoid out-group sources narrows our perspectives, deepens political polarization, and can undermine trust in democratic institutions. This is part of a broader trend of political sectarianism, where the other side is seen not just as wrong, but as evil (as shown in the figure below). So, what can be done?

Our new research finds evidence for a fresh solution: artificial intelligence (AI) can help people bypass these deep-seated partisan biases.

Nearly a billion people now use AI chatbots. And as tools like ChatGPT become more common, they are transforming how we access information. We reasoned that beca…